Introduction

Whilst reading Itzik Ben Gan’s excellent book on T-SQL I came across the COMPRESS and DECOMPRESS functions. These could be quite useful for some situations that I have, so decided to have a closer look.

Background

COMPRESS and DECOMPRESS were introduced in SQL Server 2016. The COMPRESS function uses the GZIP algorithm to compress the data that is supplied to it (character or binary). However, the DECOMPRESS function will only work against binary data, so the target from COMPRESS should be a binary column.

You can COMPRESS into an NVARCHAR column but the attempt to DECOMPRESS will produce the following response:

Msg 8116, Level 16, State 1, Line 2

Argument data type nvarchar(max) is invalid for argument 1 of Decompress function.

Test Environment

In the following example I create two tables – one for the uncompressed data, using an NVARCHAR column and the other table for the compressed data, with a VARBINARY column. I’ve deliberately kept the tables as simple as possible for the purposes of this demo.

CREATE TABLE dbo.WarAndPeace_Text

(

id BIGINT IDENTITY(1,1) NOT NULL,

TextData NVARCHAR(max) null

);

GO

CREATE TABLE dbo.WarAndPeace_Compressed

(

id BIGINT IDENTITY(1,1) NOT NULL,

TextData VARBINARY(max) null

);

GO

For test data I import the Project Gutenberg copy of “War and Peace” and import it into the ‘dbo.WarAndPeace_Text’ table. This provides me with just over 66,000 rows of data.

War and Peace imported as source data

Using COMPRESS

Now I use the COMPRESS function to load the data into ‘dbo.WarAndPeace_Compressed’. Because I know both tables were empty at the start I’m letting SQL take care of the ID column, happy that they will match across both tables.

INSERT INTO dbo.WarAndPeace_Compressed ( TextData ) SELECT COMPRESS(TextData) FROM dbo.WarAndPeace_Text ORDER BY id;

So first of all, look at the size of the two tables:

The size of the uncompressed and compressed data

The compressed data table is 1,552KB smaller.

How does look when comparing the individual rows?

Original row datalength and compressed datalength

This is where it gets a little more interesting – the row with the id of 8 is actually a longer length in the compressed format. This is possible with compression algorithms, so don’t always assume your data will shrink in length. However, the overall impact is a reduction in data length.

Using DECOMPRESS

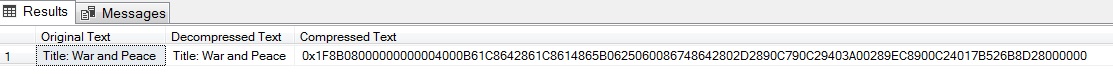

Now we can check the resultant data by using the DECOMPRESS functio, matching the ID column against the uncompressed table. As an aside, I’ll show what the data looks like in the compressed form:

SELECT t.TextData AS [Original Text], CAST(DECOMPRESS(c.TextData) AS NVARCHAR(max)) AS [Decompressed Text], c.TextData AS [Compressed Text] FROM dbo.WarAndPeace_Text AS t INNER JOIN dbo.WarAndPeace_Compressed AS c ON c.id = t.id WHERE t.id = 8;

Using the ID of the row data that actually looked longer in compressed format, the data has been returned as expected:

The text, uncompressed and compressed data

Pick another ID, to show that wasn’t a fluke:

Showing another row results

Conclusion

These are two useful functions, particularly if you are archiving large amounts of text data. However, test that you do get sufficient storage savings, as compressing data doesn’t always make it smaller.